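# A Tour of the Unikraft File System

## Call Hierarchy of File System Operations

Note: this walkthrough uses 9pfs and the file-read function (read) as its running example.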

In Unikraft, a file operation passes through five layers overall: the C standard library functions, the vfscore virtual file system, 9pfs, uk9p, and plat.

```
C standard library functions
---------------
vfscore
---------------
9pfs
---------------
uk9p
---------------
plat/virtio
```

The concrete function call flow is as follows:

```
libs/vfscore/main.c: UK_SYSCALL_R_DEFINE(ssize_t, read, ....)
------------------------------------------------------------------------
libs/vfscore/main.c: UK_SYSCALL_R_DEFINE(ssize_t, readv, ...)
------------------------------------------------------------------------
libs/vfscore/main.c: static ssize_t do_preadv(.....)
------------------------------------------------------------------------
libs/vfscore/syscalls.c: int sys_read(struct vfscore_file *fp, .....)
------------------------------------------------------------------------
libs/vfscore/fops: int vfs_read(struct vfscore_file *fp, ......)
------------------------------------------------------------------------
libs/vfscore/include/vfscore/vnode.h: #define VOP_READ(VP, FP, U, F) .....
------------------------------------------------------------------------
libs/9pfs/9pfs_vnops.c: static int uk_9pfs_read(struct vnode *vp, ....)
------------------------------------------------------------------------
libs/uk9p/9p.c: int64_t uk_9p_read(struct uk_9pdev *dev, ....)
------------------------------------------------------------------------
libs/uk9p/9p.c: static inline int send_and_wait_zc(struct uk_9pdev *dev, ..)
------------------------------------------------------------------------
libs/uk9p/9pdev.c: int uk_9pdev_request(struct uk_9pdev *dev, ....)
------------------------------------------------------------------------
plat/drivers/virtio_9p.c: static int virtio_9p_request(....)
------------------------------------------------------------------------
plat/drivers/virtio_pci.c: static int vpci_legacy_notify(....)
------------------------------------------------------------------------
plat/drivers/include/virtio/virtio_config.h: static inline void _virtio_cwrite_bytes(....)
------------------------------------------------------------------------
plat/common/include/arm/arm64/cpu.h: static inline void ioreg_write8(....)
```

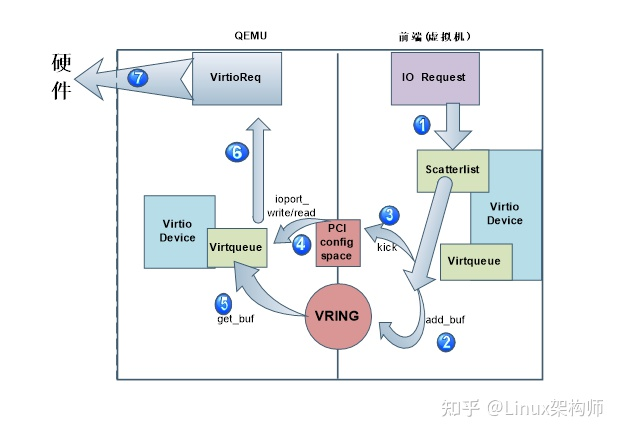

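This article covers some background on virtio: https://zhuanlan.zhihu.com/p/542483879

Here is one figure taken from it:

The figure gives a blunt but handy summary of the whole call hierarchy of file system operations.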

### vfscore

In the C standard library, read is declared as follows:

```c
ssize_t read(int fd, void *buf, size_t count);
```

This function reads up to count bytes from the file referred to by descriptor fd into the memory starting at buf.

In Unikraft, read is defined in vfscore/main.c using a macro (UK_SYSCALL_R_DEFINE). Its definition and implementation in main.c are as follows:

```c
UK_SYSCALL_R_DEFINE(ssize_t, read, int, fd, void *, buf, size_t, count)
{
	ssize_t bytes;

	UK_ASSERT(buf);

	struct iovec iov = {
		.iov_base = buf,
		.iov_len  = count,
	};

	trace_vfs_read(fd, buf, count);
	bytes = uk_syscall_r_readv((long) fd, (long) &iov, 1);
	if (bytes < 0)
		trace_vfs_read_err(bytes);
	else
		trace_vfs_read_ret(bytes);
	return bytes;
}
```

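This function defines an iovec to serve as the read buffer. Its iov_base points to the caller's buf and its iov_len is count, so reading data into this iovec is the same as reading into buf. It then calls uk_syscall_r_readv with this single iovec.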
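The same wrapper pattern can be reproduced outside Unikraft. The sketch below rests on one stated assumption: it uses the standard POSIX readv(2) in place of uk_syscall_r_readv. The helper name read_via_readv is hypothetical. It shows that a read of count bytes is simply a vectored read with one iovec that aliases the caller's buffer.

```c
#include <sys/types.h>
#include <sys/uio.h>
#include <unistd.h>

/* Hypothetical helper: read() expressed as a one-element readv(),
 * mirroring the structure of Unikraft's read wrapper. */
static ssize_t read_via_readv(int fd, void *buf, size_t count)
{
	struct iovec iov = {
		.iov_base = buf,   /* data lands directly in the caller's buffer */
		.iov_len  = count, /* at most count bytes are transferred */
	};

	return readv(fd, &iov, 1);
}
```

If a pipe holds "hello", reading it into an 8-byte buffer this way returns 5, and the bytes appear in the buffer itself because the iovec points at it rather than at a copy.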

uk_syscall_r_readv is generated by the following readv definition:

```c
UK_SYSCALL_R_DEFINE(ssize_t, readv, int, fd, const struct iovec *, iov, int, iovcnt)
{
	struct vfscore_file *fp;
	ssize_t bytes;
	int error;

	trace_vfs_readv(fd, iov, iovcnt);
	error = fget(fd, &fp);
	if (error) {
		error = -error;
		goto out_error;
	}

	if (fp->f_offset < 0 &&
	    (fp->f_dentry == NULL ||
	     fp->f_dentry->d_vnode->v_type != VCHR)) {
		error = -EINVAL;
		goto out_error_fdrop;
	}

	error = do_preadv(fp, iov, iovcnt, -1, &bytes);

out_error_fdrop:
	fdrop(fp);

	if (error < 0)
		goto out_error;

	trace_vfs_readv_ret(bytes);
	return bytes;

out_error:
	trace_vfs_readv_err(error);
	return error;
}
```

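After looking up the file with fget, this function checks whether the vfscore_file's attributes are valid. A negative file offset is rejected with EINVAL unless the file has a dentry whose vnode is a character device (VCHR). If the check fails, the error is returned; otherwise the function moves down to do_preadv, passing offset -1 to mean "use the current file position". On every path after a successful fget, the reference is released with fdrop.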
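The get, validate, drop shape of readv can be shown with a toy model. The sketch below is not vfscore code: sk_file, sk_fdtable, sk_fget, sk_fdrop and sk_readv_checks are hypothetical names, and the dentry/vnode lookup is collapsed into one type field. It shows how the goto label guarantees that the reference taken by fget is dropped on both the error path and the success path.

```c
#include <errno.h>
#include <stddef.h>

/* Hypothetical reduced file object; not vfscore's struct vfscore_file. */
enum sk_vtype { SK_VREG, SK_VCHR };

struct sk_file {
	int refcnt;
	long f_offset;
	enum sk_vtype type;   /* stands in for f_dentry->d_vnode->v_type */
};

#define SK_NFD 4
static struct sk_file *sk_fdtable[SK_NFD];

static int sk_fget(int fd, struct sk_file **fpp)
{
	if (fd < 0 || fd >= SK_NFD || !sk_fdtable[fd])
		return EBADF;
	sk_fdtable[fd]->refcnt++;   /* keep the file alive while we use it */
	*fpp = sk_fdtable[fd];
	return 0;
}

static void sk_fdrop(struct sk_file *fp)
{
	fp->refcnt--;
}

/* Returns 0 or a negative errno, like the syscall body above. */
static long sk_readv_checks(int fd)
{
	struct sk_file *fp;
	long error;

	error = sk_fget(fd, &fp);
	if (error)
		return -error;
	/* A negative position is only tolerated for character devices. */
	if (fp->f_offset < 0 && fp->type != SK_VCHR) {
		error = -EINVAL;
		goto out_drop;
	}
	error = 0;   /* the real code calls do_preadv() at this point */
out_drop:
	sk_fdrop(fp);   /* every path after a successful fget drops the ref */
	return error;
}
```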

```c
static ssize_t do_preadv(struct vfscore_file *fp, const struct iovec *iov,
			 int iovcnt, off_t offset, ssize_t *bytes)
{
	size_t cnt;
	int error;

	UK_ASSERT(fp && iov);

	error = sys_read(fp, iov, iovcnt, offset, &cnt);

	if (has_error(error, cnt))
		goto out_error;

	*bytes = cnt;
	return 0;

out_error:
	return -error;
}
```

do_preadv, in turn, simply calls sys_read and converts the positive errno it returns into a negative return value.

```c
int sys_read(struct vfscore_file *fp, const struct iovec *iov, size_t niov,
	     off_t offset, size_t *count)
{
	int error = 0;
	struct iovec *copy_iov;

	if ((fp->f_flags & UK_FREAD) == 0)
		return EBADF;

	size_t bytes = 0;
	const struct iovec *iovp = iov;

	for (unsigned i = 0; i < niov; i++) {
		if (iovp->iov_len > IOSIZE_MAX - bytes) {
			return EINVAL;
		}
		bytes += iovp->iov_len;
		iovp++;
	}

	if (bytes == 0) {
		*count = 0;
		return 0;
	}

	struct uio uio;
	copy_iov = calloc(sizeof(struct iovec), niov);
	if (!copy_iov)
		return ENOMEM;
	memcpy(copy_iov, iov, sizeof(struct iovec) * niov);

	uio.uio_iov = copy_iov;
	uio.uio_iovcnt = niov;
	uio.uio_offset = offset;
	uio.uio_resid = bytes;
	uio.uio_rw = UIO_READ;
	error = vfs_read(fp, &uio, (offset == -1) ? 0 : FOF_OFFSET);
	*count = bytes - uio.uio_resid;

	free(copy_iov);
	return error;
}
```

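sys_read first walks all the iovecs passed in and checks whether the sum of their iov_len values would exceed the maximum I/O size IOSIZE_MAX. If it would, an error is returned. It then checks whether the total length is 0; if so, nothing needs to be read from the lower layers and it returns immediately. Next, it copies the iovec array with calloc/memcpy, because the caller's array is const while the lower layers advance iov_base and iov_len as they consume data. Finally, it builds a uio object from the copied iovecs, the iovec count niov, the file offset offset, the total byte count, and the transfer mode UIO_READ, and calls vfs_read to move down a layer.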
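The length check in sys_read is written as `iov_len > IOSIZE_MAX - bytes` rather than `bytes + iov_len > IOSIZE_MAX`, so the comparison itself can never overflow. The standalone sketch below isolates that check. SKETCH_IOSIZE_MAX is a hypothetical limit; vfscore's real IOSIZE_MAX may differ. iov_total_len is also a hypothetical helper name.

```c
#include <errno.h>
#include <stddef.h>
#include <stdint.h>
#include <sys/uio.h>

/* Hypothetical stand-in for vfscore's IOSIZE_MAX. */
#define SKETCH_IOSIZE_MAX (SIZE_MAX / 2)

/* Sum the iovec lengths into *total, or return EINVAL if the sum would
 * exceed the limit. bytes <= SKETCH_IOSIZE_MAX always holds, so the
 * subtraction below cannot wrap around. */
static int iov_total_len(const struct iovec *iov, size_t niov, size_t *total)
{
	size_t bytes = 0;

	for (size_t i = 0; i < niov; i++) {
		if (iov[i].iov_len > SKETCH_IOSIZE_MAX - bytes)
			return EINVAL;
		bytes += iov[i].iov_len;
	}
	*total = bytes;
	return 0;
}
```

With two iovecs of 3 and 4 bytes, the total is 7. A pair whose lengths add up to one past the limit is rejected with EINVAL instead of silently wrapping.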

```c
int vfs_read(struct vfscore_file *fp, struct uio *uio, int flags)
{
	struct vnode *vp = fp->f_dentry->d_vnode;
	int error;
	size_t count;
	ssize_t bytes;

	bytes = uio->uio_resid;

	vn_lock(vp);
	if ((flags & FOF_OFFSET) == 0)
		uio->uio_offset = fp->f_offset;

	error = VOP_READ(vp, fp, uio, 0);
	if (!error) {
		count = bytes - uio->uio_resid;
		if (((flags & FOF_OFFSET) == 0) &&
		    !(fp->f_vfs_flags & UK_VFSCORE_NOPOS))
			fp->f_offset += count;
	}
	vn_unlock(vp);

	return error;
}
```

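vfs_read calls the VOP_READ macro, i.e. the vnode's read operation. When no explicit offset was passed (FOF_OFFSET not set, which is the case for read/readv since they pass offset -1), the read starts at fp->f_offset. After a successful read, the file pointer is advanced by count, the number of bytes actually read, unless the file is flagged UK_VFSCORE_NOPOS. In other words, count bytes of the file have been consumed, so the next read on this open file continues from the new position.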
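The offset rule in vfs_read can be illustrated with an in-memory model. This is a sketch only: sketch_file and sketch_read are hypothetical, and `offset == -1` stands for the read/readv path (flags 0), while any other offset stands for an explicit-offset read (FOF_OFFSET). Error handling and the UK_VFSCORE_NOPOS flag are left out.

```c
#include <string.h>
#include <sys/types.h>

/* Hypothetical in-memory file, only to illustrate how vfs_read() picks
 * and updates the offset; it is not a Unikraft type. */
struct sketch_file {
	const char *data;
	size_t size;
	off_t f_offset;   /* position shared by successive read() calls */
};

/* offset == -1: start at f_offset and advance it (read/readv path).
 * Otherwise: start at offset and leave f_offset untouched (FOF_OFFSET). */
static ssize_t sketch_read(struct sketch_file *fp, char *buf, size_t n,
			   off_t offset)
{
	off_t start = (offset == -1) ? fp->f_offset : offset;
	size_t count = 0;

	if (start >= 0 && (size_t)start < fp->size) {
		count = fp->size - (size_t)start;
		if (count > n)
			count = n;
		memcpy(buf, fp->data + start, count);
	}
	if (offset == -1)
		fp->f_offset += (off_t)count;
	return (ssize_t)count;
}
```

On a 6-byte file "abcdef", two positional reads of 3 bytes return "abc" and then "def". An explicit-offset read at 0 in between returns "ab" but does not move f_offset.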

### 9pfs

In vfscore, the VOP_READ macro wraps the vnode's vop_read operation so that upper layers can call it. 9pfs provides the implementations of the operations in vnops:

```c
struct vnops uk_9pfs_vnops = {
	.vop_open      = uk_9pfs_open,
	.vop_close     = uk_9pfs_close,
	.vop_read      = uk_9pfs_read,
	.vop_write     = uk_9pfs_write,
	.vop_seek      = uk_9pfs_seek,
	.vop_ioctl     = uk_9pfs_ioctl,
	.vop_fsync     = uk_9pfs_fsync,
	.vop_readdir   = uk_9pfs_readdir,
	.vop_lookup    = uk_9pfs_lookup,
	.vop_create    = uk_9pfs_create,
	.vop_remove    = uk_9pfs_remove,
	.vop_rename    = uk_9pfs_rename,
	.vop_mkdir     = uk_9pfs_mkdir,
	.vop_rmdir     = uk_9pfs_rmdir,
	.vop_getattr   = uk_9pfs_getattr,
	.vop_setattr   = uk_9pfs_setattr,
	.vop_inactive  = uk_9pfs_inactive,
	.vop_truncate  = uk_9pfs_truncate,
	.vop_link      = uk_9pfs_link,
	.vop_cache     = uk_9pfs_cache,
	.vop_fallocate = uk_9pfs_fallocate,
	.vop_readlink  = uk_9pfs_readlink,
	.vop_symlink   = uk_9pfs_symlink,
	.vop_poll      = uk_9pfs_poll,
};
```
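The mechanism behind this table is ordinary C function-pointer dispatch. The sketch below is a heavily reduced illustration: struct sk_vnode, struct sk_vnops, SK_VOP_READ and the "toyfs" filesystem are hypothetical and are not vfscore's definitions. The generic layer only follows the vnode's operations pointer, and the filesystem that owns the vnode decides which function runs.

```c
#include <stddef.h>

struct sk_vnode;

/* Hypothetical, reduced operations table with a single entry. */
struct sk_vnops {
	int (*vop_read)(struct sk_vnode *vp, char *buf, size_t len);
};

struct sk_vnode {
	const struct sk_vnops *v_op;  /* set by the filesystem owning the node */
};

/* The generic layer never names the filesystem; it just follows v_op. */
#define SK_VOP_READ(vp, buf, len) ((vp)->v_op->vop_read((vp), (buf), (len)))

/* A toy filesystem whose "read" fills the buffer with 'x'. */
static int toyfs_read(struct sk_vnode *vp, char *buf, size_t len)
{
	(void)vp;
	for (size_t i = 0; i < len; i++)
		buf[i] = 'x';
	return 0;
}

static const struct sk_vnops toyfs_vnops = { .vop_read = toyfs_read };
```

Swapping in another filesystem only means pointing v_op at a different table. No caller of SK_VOP_READ has to change.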

So the VOP_READ in the layer above actually resolves to uk_9pfs_read in 9pfs:

```c
static int uk_9pfs_read(struct vnode *vp, struct vfscore_file *fp,
			struct uio *uio, int ioflag __unused)
{
	struct uk_9pdev *dev = /* ... */;
	struct uk_9pfid *fid = /* ... */;
	struct iovec *iov;
	int rc;

	if (vp->v_type == VDIR)
		return EISDIR;
	if (vp->v_type != VREG)
		return EINVAL;
	if (uio->uio_offset < 0)
		return EINVAL;
	if (uio->uio_offset >= (off_t) vp->v_size)
		return 0;

	if (!uio->uio_resid)
		return 0;

	/* Skip leading empty iovecs. The current iovec must be re-read on
	 * every iteration; otherwise the loop never terminates when the
	 * first iovec is empty. */
	while (!uio->uio_iov->iov_len) {
		uio->uio_iov++;
		uio->uio_iovcnt--;
	}
	iov = uio->uio_iov;

	rc = uk_9p_read(dev, fid, uio->uio_offset,
			iov->iov_len, iov->iov_base);
	if (rc < 0)
		return -rc;

	iov->iov_base = (char *)iov->iov_base + rc;
	iov->iov_len -= rc;
	uio->uio_resid -= rc;
	uio->uio_offset += rc;

	return 0;
}
```

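Both struct vnode and struct mount contain a void * data field that holds private data. In 9pfs, the vnode's data holds a uk_9pfs_file_data and the mount's data holds a uk_9pfs_mount_data. uk_9pfs_file_data stores information about the 9P file, and uk_9pfs_mount_data stores information about the device on which 9pfs is mounted.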
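The opaque-pointer pattern looks like this in miniature. Everything in the sketch below is hypothetical: the sk_* structs only loosely mirror the roles of uk_9pfs_mount_data (per mount, the 9P device) and uk_9pfs_file_data (per file, the fid), and the real field names and accessor helpers in 9pfs may differ. The VFS stores and passes the void * without interpreting it, and only the filesystem that stored it casts it back.

```c
#include <stdint.h>

/* Hypothetical reductions of the generic objects. */
struct sk_mount { void *m_data; };
struct sk_vnode { struct sk_mount *v_mount; void *v_data; };

/* Hypothetical filesystem-private records. */
struct sk_9p_mount_data { int dev_id; };   /* per mount: which device */
struct sk_9p_file_data  { uint32_t fid; }; /* per file: which 9P fid */

/* Accessors in the spirit of a filesystem's helper macros. */
static inline struct sk_9p_mount_data *sk_md(const struct sk_mount *mp)
{
	return (struct sk_9p_mount_data *)mp->m_data;
}

static inline struct sk_9p_file_data *sk_fd(const struct sk_vnode *vp)
{
	return (struct sk_9p_file_data *)vp->v_data;
}
```

A read handler can then reach both the device, through vp->v_mount, and the fid, through vp itself, starting from nothing but the vnode that VOP_READ hands it.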

### uk9p

uk_9pfs_read calls uk_9p_read:

```c
int64_t uk_9p_read(struct uk_9pdev *dev, struct uk_9pfid *fid,
		   uint64_t offset, uint32_t count, char *buf)
{
	struct uk_9preq *req;
	int64_t rc;

	if (fid->iounit != 0)
		count = MIN(count, fid->iounit);
	count = MIN(count, dev->msize - 11);

	uk_pr_debug("TREAD fid %u offset %lu count %u\n", fid->fid,
		    offset, count);

	req = request_create(dev, UK_9P_TREAD);
	if (PTRISERR(req))
		return PTR2ERR(req);

	if ((rc = uk_9preq_write32(req, fid->fid)) ||
	    (rc = uk_9preq_write64(req, offset)) ||
	    (rc = uk_9preq_write32(req, count)) ||
	    (rc = send_and_wait_zc(dev, req, UK_9PREQ_ZCDIR_READ, buf,
				   count, 11)) ||
	    (rc = uk_9preq_read32(req, &count)))
		goto out;

	uk_pr_debug("RREAD count %u\n", count);

	rc = count;

out:
	uk_9pdev_req_remove(dev, req);
	return rc;
}
```

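uk_9p_read speaks the Plan 9 protocol (9P). It first clamps count to the fid's iounit (if one is set) and to msize - 11. It then serializes the request fields (fid, offset, count) into a uk_9preq and sends the request with send_and_wait_zc. When the reply arrives, the actual byte count is read back from it and returned.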
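The constant 11 matches the 9P2000 wire format. Every message starts with size[4] type[1] tag[2]. An Rread then carries count[4] followed by the data, so the data begins 11 bytes into the reply and at most msize - 11 bytes fit in one message. The sketch below encodes a Tread (size[4] type[1] tag[2] fid[4] offset[8] count[4], little-endian) by hand. It is an illustrative encoder based on the 9P2000 message layout, not uk9p's uk_9preq_write*() API, and its helper names are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

#define P9_TREAD 116                   /* Tread message type in 9P2000 */
#define P9_RREAD_HDR (4 + 1 + 2 + 4)   /* size, type, tag, count = 11 */

/* Append v as nbytes little-endian bytes; 9P is little-endian. */
static uint8_t *put_le(uint8_t *p, uint64_t v, int nbytes)
{
	for (int i = 0; i < nbytes; i++)
		*p++ = (uint8_t)(v >> (8 * i));
	return p;
}

/* Serialize a Tread into out (at least 23 bytes); returns its length. */
static size_t encode_tread(uint8_t *out, uint16_t tag, uint32_t fid,
			   uint64_t offset, uint32_t count)
{
	const uint32_t size = 4 + 1 + 2 + 4 + 8 + 4;   /* = 23 */
	uint8_t *p = out;

	p = put_le(p, size, 4);
	p = put_le(p, P9_TREAD, 1);
	p = put_le(p, tag, 2);
	p = put_le(p, fid, 4);
	p = put_le(p, offset, 8);
	p = put_le(p, count, 4);
	return (size_t)(p - out);
}

/* Largest count that fits in one Rread, mirroring "msize - 11". */
static uint32_t max_read_count(uint32_t msize)
{
	return msize - P9_RREAD_HDR;
}
```

For example, with a negotiated msize of 8192, a single Tread can ask for at most 8181 bytes.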

```c
static inline int send_and_wait_zc(struct uk_9pdev *dev,
				   struct uk_9preq *req,
				   enum uk_9preq_zcdir zc_dir,
				   void *zc_buf, uint32_t zc_size,
				   uint32_t zc_offset)
{
	int rc;

	if ((rc = uk_9preq_ready(req, zc_dir, zc_buf, zc_size, zc_offset)))
		return rc;
	uk_9p_trace_ready(req->tag);

	if ((rc = uk_9pdev_request(dev, req)))
		return rc;
	uk_9p_trace_sent(req->tag);

	if ((rc = uk_9preq_waitreply(req)))
		return rc;
	uk_9p_trace_received(req->tag);

	return 0;
}
```

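send_and_wait_zc has three steps. First, uk_9preq_ready marks the request req as ready. Depending on zc_dir (read or write), it initializes the request differently, mainly with respect to zc_buf, zc_size and zc_offset, so that req is set up either to send the data being written or to receive the data being read. Second, uk_9pdev_request sends the request, descending into the next layer. Third, uk_9preq_waitreply waits for the lower layer to process the request and deliver the reply.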
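The three steps form a small state machine. The sketch below models it under explicit assumptions: the states only echo the UK_9PREQ_* names, and the fake transport in sk_dev_request "replies" immediately, whereas a real transport gets the reply later from the device. None of the sk_* names are uk9p API.

```c
#include <errno.h>
#include <stddef.h>

/* Hypothetical request states, loosely echoing UK_9PREQ_*. */
enum sk_req_state { SK_REQ_NONE, SK_REQ_READY, SK_REQ_SENT, SK_REQ_RECEIVED };

struct sk_req {
	enum sk_req_state state;
	void *zc_buf;      /* zero-copy buffer: read target or write source */
	unsigned zc_size;
};

/* Step 1: attach the zero-copy buffer and mark the request ready. */
static int sk_req_ready(struct sk_req *req, void *buf, unsigned size)
{
	if (req->state != SK_REQ_NONE)
		return -EINVAL;
	req->zc_buf = buf;
	req->zc_size = size;
	req->state = SK_REQ_READY;
	return 0;
}

/* Step 2: hand the request to the transport; only READY requests go out. */
static int sk_dev_request(struct sk_req *req)
{
	if (req->state != SK_REQ_READY)
		return -EINVAL;
	req->state = SK_REQ_SENT;
	/* Fake transport: complete at once instead of waiting for a device. */
	req->state = SK_REQ_RECEIVED;
	return 0;
}

/* Step 3: a real implementation would block here until the reply. */
static int sk_req_waitreply(const struct sk_req *req)
{
	return req->state == SK_REQ_RECEIVED ? 0 : -EIO;
}

static int sk_send_and_wait(struct sk_req *req, void *buf, unsigned size)
{
	int rc;

	if ((rc = sk_req_ready(req, buf, size)))
		return rc;
	if ((rc = sk_dev_request(req)))
		return rc;
	return sk_req_waitreply(req);
}
```

Checking the state at each step means a request that was already used is rejected at step 1 rather than sent twice.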

```c
int uk_9pdev_request(struct uk_9pdev *dev, struct uk_9preq *req)
{
	int rc;

	UK_ASSERT(dev);
	UK_ASSERT(req);

	if (UK_READ_ONCE(req->state) != UK_9PREQ_READY) {
		rc = -EINVAL;
		goto out;
	}

	if (dev->state != UK_9PDEV_CONNECTED) {
		rc = -EIO;
		goto out;
	}

#if CONFIG_LIBUKSCHED
	uk_waitq_wait_event(&dev->xmit_wq,
		(rc = dev->ops->request(dev, req)) != -ENOSPC);
#else
	do {
		rc = dev->ops->request(dev, req);
	} while (rc == -ENOSPC);
#endif

out:
	return rc;
}
```

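uk_9pdev_request first checks that req is in the READY state and that the device is connected. It then immediately calls the lower layer's dev->ops->request(dev, req), retrying for as long as the call returns -ENOSPC. This request operation has two underlying implementations, plat/drivers/virtio/virtio_9p.c and plat/xen/drivers/9p/9pfront.c. The former is used as the example here.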
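The non-scheduler branch of uk_9pdev_request reduces to a retry loop. In this loop -ENOSPC means "transport busy, try again", and any other result, success or a different error, ends it. The sketch below isolates that loop with a fake transport. sk_request_fn, sk_request_retry and sk_fake_request are hypothetical names standing in for dev->ops->request. With CONFIG_LIBUKSCHED, the real code instead waits on dev->xmit_wq until the call stops returning -ENOSPC.

```c
#include <errno.h>

/* Hypothetical transport callback, standing in for dev->ops->request. */
typedef int (*sk_request_fn)(void *ctx);

/* Retry while the transport reports -ENOSPC; return anything else. */
static int sk_request_retry(sk_request_fn request, void *ctx)
{
	int rc;

	do {
		rc = request(ctx);
	} while (rc == -ENOSPC);
	return rc;
}

/* Fake transport: the queue is "full" for the first *busy attempts. */
static int sk_fake_request(void *ctx)
{
	int *busy = ctx;

	if (*busy > 0) {
		(*busy)--;
		return -ENOSPC;
	}
	return 0;
}
```

Starting with three busy attempts, the loop calls the transport four times and returns 0. Without a scheduler this is a busy wait, which is why the scheduler-enabled branch sleeps on a wait queue instead.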

### plat/virtio

The trans_ops used by the upper-layer dev are implemented in plat/drivers/virtio/virtio_9p.c. virtio_9p implements the three operations of uk_9pdev_trans_ops and wraps them in a uk_9pdev_trans structure:

```c
static const struct uk_9pdev_trans_ops v9p_trans_ops = {
	.connect    = virtio_9p_connect,
	.disconnect = virtio_9p_disconnect,
	.request    = virtio_9p_request
};

static struct uk_9pdev_trans v9p_trans = {
	.name = "virtio",
	.ops  = &v9p_trans_ops,
	.a    = NULL
};
```

So the upper layer's dev->ops->request(dev, req) corresponds to virtio_9p_request here:

```c
static int virtio_9p_request(struct uk_9pdev *p9dev,
			     struct uk_9preq *req)
{
	struct virtio_9p_device *dev;
	int rc, host_notified = 0;
	unsigned long flags;
	size_t read_segs, write_segs;
	bool failed = false;

	UK_ASSERT(p9dev);
	UK_ASSERT(req);
	UK_ASSERT(UK_READ_ONCE(req->state) == UK_9PREQ_READY);

	uk_9preq_get(req);

	dev = p9dev->priv;
	ukplat_spin_lock_irqsave(&dev->spinlock, flags);
	uk_sglist_reset(&dev->sg);

	rc = uk_sglist_append(&dev->sg, req->xmit.buf, req->xmit.size);
	if (rc < 0) {
		failed = true;
		goto out_unlock;
	}

	if (req->xmit.zc_buf) {
		rc = uk_sglist_append(&dev->sg, req->xmit.zc_buf,
				      req->xmit.zc_size);
		if (rc < 0) {
			failed = true;
			goto out_unlock;
		}
	}

	read_segs = dev->sg.sg_nseg;

	rc = uk_sglist_append(&dev->sg, req->recv.buf, req->recv.size);
	if (rc < 0) {
		failed = true;
		goto out_unlock;
	}

	if (req->recv.zc_buf) {
		uint32_t recv_size = req->recv.size + req->recv.zc_size;

		rc = uk_sglist_append(&dev->sg, req->recv.zc_buf,
				      req->recv.zc_size);
		if (rc < 0) {
			failed = true;
			goto out_unlock;
		}

		if (recv_size < UK_9P_RERROR_MAXSIZE) {
			uint32_t leftover = UK_9P_RERROR_MAXSIZE - recv_size;

			rc = uk_sglist_append(&dev->sg,
					      req->recv.buf + recv_size,
					      leftover);
			if (rc < 0) {
				failed = true;
				goto out_unlock;
			}
		}
	}

	write_segs = dev->sg.sg_nseg - read_segs;

	rc = virtqueue_buffer_enqueue(dev->vq, req, &dev->sg,
				      read_segs, write_segs);
	if (likely(rc >= 0)) {
		UK_WRITE_ONCE(req->state, UK_9PREQ_SENT);
		virtqueue_host_notify(dev->vq);
		host_notified = 1;
		rc = 0;
	}

out_unlock:
	if (failed)
		uk_pr_err(DRIVER_NAME": Failed to append to the sg list.\n");
	ukplat_spin_unlock_irqrestore(&dev->spinlock, flags);
	if (!host_notified)
		uk_9preq_put(req);
	return rc;
}
```

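The code is long, but in short it proceeds in the following steps:

1. Append req->xmit.buf and req->xmit.size (the request header) to the sglist.

2. If req->xmit.zc_buf is non-NULL, the operation is a write, so append req->xmit.zc_buf and req->xmit.zc_size (the data to be written) to the sglist.

3. Store the current segment count in read_segs. (Why "read"? My guess is that req->xmit.buf and req->xmit.zc_buf carry data handed down from the upper layer, so the layer below has to read them. This matches the virtio convention, in which these are device-readable buffers that the host reads.)

4. Append req->recv.buf and req->recv.size (the reply header buffer, into which the lower layer writes the response) to the sglist.

5. If req->recv.zc_buf is non-NULL, the operation is a read, so append req->recv.zc_buf and req->recv.zc_size (where the data read will be placed) to the sglist. If recv.size + recv.zc_size is still smaller than UK_9P_RERROR_MAXSIZE, an extra slice of recv.buf is appended to bring the total up to that size, presumably so that an Rerror reply still fits.

6. Store the number of segments appended after read_segs in write_segs. (Why "write"? My guess is that once req->recv.buf and req->recv.zc_buf are passed down, the lower layer writes into these buffers. In virtio terms they are device-writable buffers.)

7. Finally, pass req, dev's sglist, read_segs and write_segs to the virtqueue that belongs to dev, then call virtqueue_host_notify to tell the host OS that the request has been queued.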
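The buffer layout from these steps can be sketched with a toy scatter-gather list. This is an illustration under stated assumptions: sk_sglist is a hypothetical fixed-size list rather than uk_sglist, sk_layout is a hypothetical helper, and the UK_9P_RERROR_MAXSIZE padding from step 5 is omitted. The key property is that all device-readable segments come first and all device-writable segments follow, as the virtio descriptor chain requires.

```c
#include <stddef.h>

#define SK_MAX_SEGS 8

struct sk_seg { void *base; size_t len; };
struct sk_sglist { struct sk_seg segs[SK_MAX_SEGS]; size_t nseg; };

static int sk_sg_append(struct sk_sglist *sg, void *base, size_t len)
{
	if (sg->nseg == SK_MAX_SEGS)
		return -1;
	sg->segs[sg->nseg].base = base;
	sg->segs[sg->nseg].len = len;
	sg->nseg++;
	return 0;
}

/* Lay out one request like virtio_9p_request(): device-readable buffers
 * (request header, optional write payload) first, then device-writable
 * ones (reply header, optional read target). Returns 0 on success. */
static int sk_layout(struct sk_sglist *sg,
		     void *xmit, size_t xmit_len, void *xmit_zc, size_t xmit_zc_len,
		     void *recv, size_t recv_len, void *recv_zc, size_t recv_zc_len,
		     size_t *read_segs, size_t *write_segs)
{
	sg->nseg = 0;
	if (sk_sg_append(sg, xmit, xmit_len))
		return -1;
	if (xmit_zc && sk_sg_append(sg, xmit_zc, xmit_zc_len))
		return -1;
	*read_segs = sg->nseg;                 /* the device reads these */
	if (sk_sg_append(sg, recv, recv_len))
		return -1;
	if (recv_zc && sk_sg_append(sg, recv_zc, recv_zc_len))
		return -1;
	*write_segs = sg->nseg - *read_segs;   /* the device writes these */
	return 0;
}
```

For a Tread, this yields one readable segment (the header) and two writable ones (reply header plus data buffer). A Twrite is the mirror image: two readable and one writable.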

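virtqueue_host_notify is essentially a thin wrapper around vq->vq_notify_host. It issues a memory barrier and calls the hook only if notifications are enabled: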

```c
/* virtio/virtqueue.h */
static inline void virtqueue_host_notify(struct virtqueue *vq)
{
	UK_ASSERT(vq);

	mb();
	if (vq->vq_notify_host && virtqueue_notify_enabled(vq)) {
		uk_pr_debug("notify queue %d\n", vq->queue_id);
		vq->vq_notify_host(vq->vdev, vq->queue_id);
	}
}
```

vq_notify_host is itself only an interface (a function pointer). Its concrete implementation lives in virtio_pci.c:

```c
vq = virtqueue_create(queue_id, num_desc, VIRTIO_PCI_VRING_ALIGN,
		      callback, vpci_legacy_notify, vdev, a);
```

virtqueue_create stores vpci_legacy_notify in vq_notify_host, making it the concrete implementation of that hook.
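This injection of the notify hook at creation time can be modelled in a few lines. The sketch assumes hypothetical names (sk_vq, sk_vq_init, sk_vq_host_notify, sk_record_notify), and it uses the GCC/Clang builtin __atomic_thread_fence in place of Unikraft's mb(). The transport hands its own routine to the generic virtqueue code, which later calls it without knowing which transport it belongs to.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical notify hook type, standing in for vq_notify_host. */
typedef int (*sk_notify_fn)(void *vdev, uint16_t queue_id);

struct sk_vq {
	uint16_t queue_id;
	void *vdev;
	sk_notify_fn notify_host;   /* chosen by the transport at creation */
	bool notify_enabled;        /* stand-in for virtqueue_notify_enabled() */
};

static void sk_vq_init(struct sk_vq *vq, uint16_t id, void *vdev,
		       sk_notify_fn notify)
{
	vq->queue_id = id;
	vq->vdev = vdev;
	vq->notify_host = notify;
	vq->notify_enabled = true;
}

static void sk_vq_host_notify(struct sk_vq *vq)
{
	/* Full barrier so ring updates are visible before the kick. */
	__atomic_thread_fence(__ATOMIC_SEQ_CST);
	if (vq->notify_host && vq->notify_enabled)
		vq->notify_host(vq->vdev, vq->queue_id);
}

/* Example transport hook that just records which queue was kicked. */
static int sk_last_kicked = -1;
static int sk_record_notify(void *vdev, uint16_t queue_id)
{
	(void)vdev;
	sk_last_kicked = queue_id;
	return 0;
}
```

Replacing sk_record_notify with a function that writes a device register turns the same generic code into a real driver kick. That is the role vpci_legacy_notify plays for legacy virtio PCI.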

```c
static int vpci_legacy_notify(struct virtio_dev *vdev, __u16 queue_id)
{
	struct virtio_pci_dev *vpdev;

	UK_ASSERT(vdev);
	vpdev = to_virtiopcidev(vdev);
	virtio_cwrite16((void *)(unsigned long) vpdev->pci_base_addr,
			VIRTIO_PCI_QUEUE_NOTIFY, queue_id);

	return 0;
}
```
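In the legacy virtio PCI interface, notifying the device is a single 16-bit write of the queue index to the queue-notify register at offset 16 of the device's register window. The runnable sketch below substitutes a plain array for that window. SK_VIRTIO_PCI_QUEUE_NOTIFY mirrors the legacy offset, and the sk_* helpers are hypothetical. On real hardware, base would be the mapped PCI BAR and the store would reach the device.

```c
#include <stdint.h>

/* Offset of the queue-notify register in the legacy virtio PCI header. */
#define SK_VIRTIO_PCI_QUEUE_NOTIFY 16

/* One 16-bit store at base + off, the role ioreg_write16() plays. */
static void sk_cwrite16(volatile uint8_t *base, unsigned off, uint16_t val)
{
	volatile uint16_t *reg = (volatile uint16_t *)(base + off);

	*reg = val;
}

/* "Kick" queue queue_id by writing its index to the notify register. */
static void sk_legacy_notify(volatile uint8_t *base, uint16_t queue_id)
{
	sk_cwrite16(base, SK_VIRTIO_PCI_QUEUE_NOTIFY, queue_id);
}
```

The volatile qualifier keeps the compiler from dropping or caching the store. That matters for a device register, where the write itself is the event.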

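vpci_legacy_notify calls virtio_cwrite16 to write queue_id to the device's VIRTIO_PCI_QUEUE_NOTIFY register. Following virtio_cwrite16 further down shows that _virtio_cwrite_bytes in virtio_config.h calls the ioreg_write family of functions, which are defined in plat/common/include/arm/arm64/cpu.h: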

```c
static inline void ioreg_write8(const volatile uint8_t *address, uint8_t value)
{
	asm volatile ("strb %w0, [%1]" : : "rZ" (value), "r" (address));
}

static inline void ioreg_write16(const volatile uint16_t *address,
				 uint16_t value)
{
	asm volatile ("strh %w0, [%1]" : : "rZ" (value), "r" (address));
}

static inline void ioreg_write32(const volatile uint32_t *address,
				 uint32_t value)
{
	asm volatile ("str %w0, [%1]" : : "rZ" (value), "r" (address));
}
```

ioreg_write involves a piece of inline assembly whose meaning I have not yet worked out; this needs further exploration.
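As a starting point for that exploration, here is a reading of the inline assembly based on GCC's extended-asm syntax and the AArch64 instruction set. Each helper is a single store instruction: strb stores a byte, strh a halfword, and str with a w register a 32-bit word. The instruction writes operand 0 (value, referenced as %w0, the 32-bit view of its register) to the address held in operand 1. The "rZ" constraint lets the compiler use the zero register wzr when value is the constant 0, and asm volatile keeps the store from being optimized away. The sketch below gives portable C equivalents using volatile pointer stores. The sk_ names are hypothetical, and a compiler is not obliged to emit exactly strb/strh/str for them, which is presumably why the arm64 code pins the instruction with asm.

```c
#include <stdint.h>

/* Portable counterparts of ioreg_write8/16/32: one volatile store each. */
static inline void sk_ioreg_write8(volatile uint8_t *addr, uint8_t v)
{
	*addr = v;
}

static inline void sk_ioreg_write16(volatile uint16_t *addr, uint16_t v)
{
	*addr = v;
}

static inline void sk_ioreg_write32(volatile uint32_t *addr, uint32_t v)
{
	*addr = v;
}
```

Note also that the asm statements declare no "memory" clobber. Ordering against earlier ring updates therefore relies on explicit barriers such as the mb() in virtqueue_host_notify.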